Trials of training a Teddy Bear to understand what I ask

April 19, 2016

Recently I rewrote my Teddy Bear demo. The first version was Alex, who prompted the child about their emotions in a rather crude way. The child turned a dial to select an option, then pressed a button to commit that emotion to an application in the cloud. This worked, kind of. It demonstrated the concept of being able to capture and track a child’s emotion, and alert parents to potential problems.

But as I kept playing with Alex, the interaction started feeling more awkward. It was very limited. And I couldn’t, well, train Alex to interact in more complex ways.

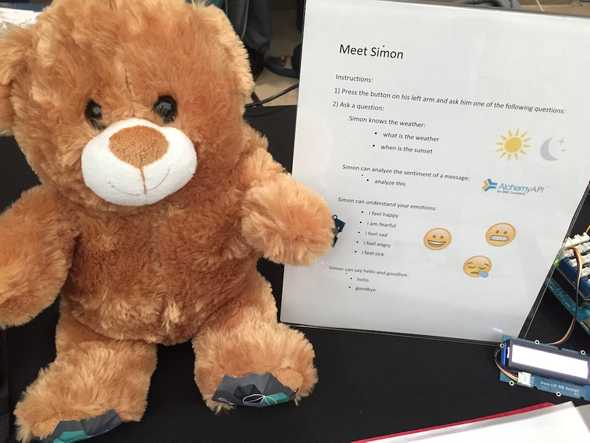

Simon is a v2 prototype and utilizes speaking capabilities as the main mechanism for interaction. First, a microphone captures the audio from the child, sends it up to IBM Watson’s Speech to Text service in IBM Bluemix where it is converted to text. The text is then processed in a Node-RED application in the Cloud. Some logic is performed to generate a response, and skipping ahead, Simon speaks the response using Watson’s Text to Speech service via a Bluetooth speaker.

Sounds pretty simple. Except that middle part. Taking in input with such a wide range of possibilities is actually pretty daunting for such a young teddy bear. Remember how hard it was to understand what adults said when you were a child? It is kind of like that. As the proud parent (ahem, the responsible developer) of Simon, I have high hopes of big advancements in his future. Okay, enough of that!

I started by training Simon with the weather. Given data from the Weather Insights API service (provided by the Weather Channel), I thought two pieces of data would be useful. “What is the weather?” returns the sky cover and temperature. “When is the sunset?” returns the time the sun will set, or the time it set if that time has already passed.
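To make those two skills concrete, here is a minimal sketch of how the replies could be phrased once the weather data is in hand. It is not Simon's actual Node-RED flow: the function names `weather_reply` and `sunset_reply`, their parameters, and the wording of the replies are all assumptions for illustration, and the weather values are passed in rather than fetched from any real API.

```python
from datetime import datetime


def weather_reply(sky_cover: str, temperature: int) -> str:
    """Combine sky cover and temperature into one spoken sentence."""
    return f"It is {sky_cover.lower()} and {temperature} degrees."


def sunset_reply(sunset: datetime, now: datetime) -> str:
    """Say when the sun will set, or when it set if that time has passed."""
    # Build a 12-hour clock string by hand to avoid locale-dependent formatting.
    hour12 = sunset.hour % 12 or 12
    suffix = "AM" if sunset.hour < 12 else "PM"
    clock = f"{hour12}:{sunset.minute:02d} {suffix}"
    if now < sunset:
        return f"The sun will set at {clock}."
    return f"The sun set at {clock}."


if __name__ == "__main__":
    # Hypothetical values standing in for data from the weather service.
    sunset = datetime(2016, 4, 19, 19, 45)
    print(weather_reply("Partly Cloudy", 68))
    print(sunset_reply(sunset, now=datetime(2016, 4, 19, 12, 0)))
    print(sunset_reply(sunset, now=datetime(2016, 4, 19, 21, 0)))
```

Passing `now` in as a parameter, rather than reading the clock inside the function, keeps both the "will set" and "set" branches easy to try out.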

I also added a skillset that used the AlchemyAPI to analyze the positive/negative sentiment and return a response like “I’m happy to hear that,” or “I’m sorry to hear that.”
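The mapping from sentiment to reply might look roughly like the sketch below. It assumes the sentiment analysis has already been reduced to a plain label such as "positive" or "negative"; the label names, the `sentiment_reply` function, and the choice to stay silent on anything else are my assumptions here, not a description of the AlchemyAPI response format.

```python
from typing import Optional

# Replies taken from the examples above, keyed by an assumed sentiment label.
REPLIES = {
    "positive": "I'm happy to hear that.",
    "negative": "I'm sorry to hear that.",
}


def sentiment_reply(label: str) -> Optional[str]:
    """Return a canned reply for a sentiment label, or None if there is none."""
    return REPLIES.get(label.strip().lower())


if __name__ == "__main__":
    for label in ("positive", "Negative", "neutral"):
        print(label, "->", sentiment_reply(label))
```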

Okay, this seems pretty simple. I can scale these skills to construct some complex conversations, right?

Not exactly. When I had others try it out, they added slight variations. “When does the sun set?” or “What time does the sun set?” or “Simon, what is the weather?” Slight variations of the same basic question make a simple this-or-that comparison impossible.
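A small sketch shows why the exact comparison breaks and one modest way around it: lowercase the text, drop punctuation and the bear's name, and look for keywords instead of whole sentences. The keyword lists and the names `INTENT_KEYWORDS`, `normalize`, and `match_intent` are hypothetical, and this is only one simple approach, not how Simon was actually built.

```python
import re
from typing import Optional, Set

WAKE_WORD = "simon"  # assumption: the bear's name is ignored when matching

# Each intent matches if ALL words in any one of its keyword sets are present.
INTENT_KEYWORDS = {
    "sunset": [{"sunset"}, {"sun", "set"}],
    "weather": [{"weather"}],
}


def normalize(utterance: str) -> Set[str]:
    """Lowercase, strip punctuation, and drop the wake word."""
    words = re.findall(r"[a-z']+", utterance.lower())
    return {word for word in words if word != WAKE_WORD}


def match_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose keywords all appear in the utterance."""
    words = normalize(utterance)
    for intent, keyword_sets in INTENT_KEYWORDS.items():
        if any(required <= words for required in keyword_sets):
            return intent
    return None


if __name__ == "__main__":
    # The exact comparison fails on a harmless variation...
    print("Simon, what is the weather?" == "What is the weather?")
    # ...while keyword matching still finds the intent.
    for question in ("When does the sun set?", "Simon, what is the weather?"):
        print(question, "->", match_intent(question))
```

Keyword matching is still brittle (a question like “Is the table set?” would not trip it, but “Did the sun set the table?” would), so it only postpones the harder problem.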

I started to realize that not only is the English language pretty complex, but there are so many different ways of saying the same thing!

And there’s also another thing that I’ve realized watching this interaction happen with Simon. In the past couple of years, more and more voice-enabled products have trained us in different ways. Sometimes we mention the company’s name (Ok Google, navigate home), or sometimes we mention the product’s name (Alexa, what time is the game on?), to activate the listening capacity. Some of these products have a finite number of inputs, but there are quite a few products that let you say practically anything. And this is where a lot of processing power in the Cloud comes into play. Given lots and lots of information, finding an answer becomes a Big Data problem, part of which entails determining the probability that what is being asked could really mean this or that.
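As a toy illustration of "how likely is it that this means that," the sketch below scores an utterance against a few example phrasings per intent using word overlap (Jaccard similarity) and picks the best match above a threshold. This is a deliberately simple stand-in, not what the large voice products do; the example phrases, the `best_intent` function, and the 0.4 threshold are all assumptions.

```python
import re
from typing import Optional, Set, Tuple

# Hypothetical example phrasings for each intent.
EXAMPLES = {
    "weather": ["what is the weather", "how is the weather today"],
    "sunset": ["when is the sunset", "what time does the sun set"],
}


def tokens(text: str) -> Set[str]:
    """Split text into a set of lowercase words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared words divided by total distinct words (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_intent(utterance: str, threshold: float = 0.4) -> Tuple[Optional[str], float]:
    """Return the highest-scoring intent and its score, or None below threshold."""
    words = tokens(utterance)
    scored = [
        (jaccard(words, tokens(example)), intent)
        for intent, examples in EXAMPLES.items()
        for example in examples
    ]
    score, intent = max(scored)
    return (intent, score) if score >= threshold else (None, score)


if __name__ == "__main__":
    for question in ("When does the sun set?", "Simon, what is the weather?", "Tell me a joke"):
        print(question, "->", best_intent(question))
```

The score is only a rough similarity, not a true probability, but it captures the idea of ranking candidate meanings rather than demanding an exact match.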

So does this mean it’s back to the drawing board? Not quite. Simon is the next step in an evolution. The project has moved from visual interaction to speech, and the next step is finding more automated ways of teaching Simon about the world. Hopefully it won’t take twenty years and a college education for Simon to become that smart. Stay tuned.