Wordsprout with HP Sprout and IBM's Text to Speech

July 20, 2015

This weekend I attended AngelHack’s Silicon Valley hackathon, held at HP in Sunnyvale. There were a handful of sponsors, including HP, IBM, Respoke, ClusterPoint, SparkPost, and Linode. With so many sponsors, it was hard to choose what to build.

But as I walked into the hackathon, I couldn’t walk past one table without stopping: four HP Sprout machines were sitting there, waiting to be used. I had seen and played with a Sprout at another developer event, and there had even been a hackathon focused on it, but a prior commitment kept me from attending. So I was eager to play with it a little more. An HP evangelist immediately introduced himself and started demonstrating the unit.

HP’s Sprout is a dual-screen machine. The regular monitor is a touch screen. Above it is an arm that holds a light and a camera pointing down at a reflective mat, and the second screen is projected onto this mat.

Objects projected onto the mat can be manipulated. If you place a physical object, such as a sea star, on the mat, you can scan it with the overhead camera and turn it into a digital object. Programs on the Windows machine let you manipulate these scanned objects in a variety of ways.

HP Sprout

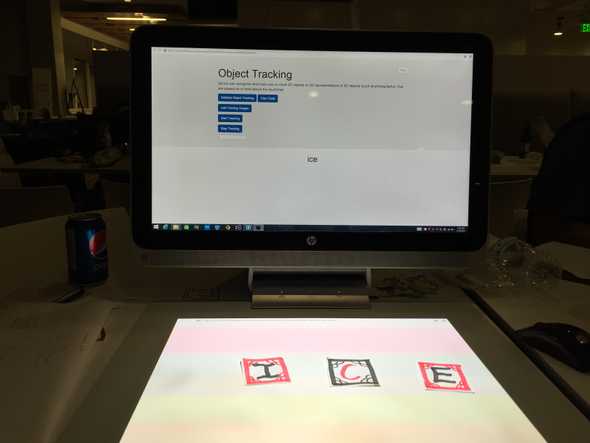

I started with the Object Tracking sample project for JavaScript, which demonstrates how to recognize objects and how to assign an ID to each scanned object.

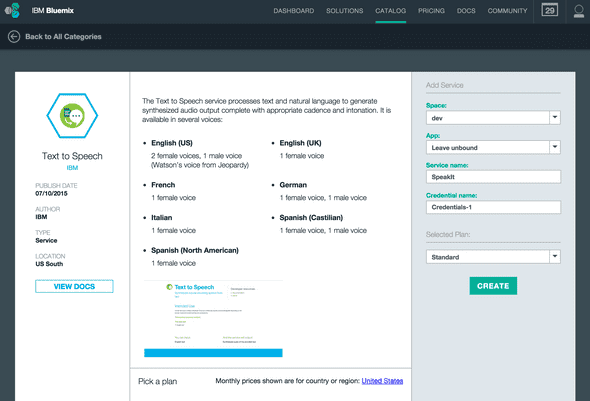
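The sample's workflow comes down to a short sequence: initialize the tracker, capture and register one training image per card, then start tracking. The sketch below uses only the `sprout` calls that appear in screen.html later in this post (`initializeObjectTracker`, `capture`, `addTrainingImages`, `startTracking`) and assumes each returns a promise-like object, since screen.html chains `.then()` on them. `trainAndTrack` and the `nextCard` hook (which should resolve once the user has placed the next card) are hypothetical names for illustration, not part of the SDK.

```javascript
// Sketch of the Object Tracking workflow: initialize, train one card per label,
// then start tracking.
// ASSUMPTIONS: `sprout` exposes the calls used in screen.html and each returns a
// thenable; `nextCard` is a hypothetical hook that resolves once the user has
// placed the next card on the mat.
async function trainAndTrack(sprout, labels, nextCard, onFrame) {
  await sprout.initializeObjectTracker();
  const pieces = [];
  for (let i = 0; i < labels.length; i++) {
    await nextCard(i, labels[i]); // wait for the user to swap cards
    const imageId = await sprout.capture(); // scan whatever is on the mat now
    const added = await sprout.addTrainingImages("o" + i, imageId);
    if (added !== true) {
      throw new Error("Training image for '" + labels[i] + "' was not added");
    }
    pieces.push(labels[i]); // pieces[i] is what object "o" + i represents
  }
  await sprout.startTracking(onFrame); // onFrame receives tracking updates
  return pieces;
}
```

Naming each object `"o" + index` mirrors screen.html, so the index can later be recovered from a tracked object's name to look up what the card represents.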

IBM Text to Speech API

I used the Text to Speech API from IBM Bluemix. You can find setup instructions in the 15th project of my 15 Projects in 30 Days challenge.

Wordsprout takes the word being composed and passes it to the Text to Speech API, which returns a WAV file that is played with an HTML5 audio element.
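A minimal sketch of that round trip, assuming the same local endpoint that screen.html uses (`http://0.0.0.0:8080/api/speak?text=`) and assuming it responds with WAV audio. The helper names `speakUrl` and `speak` are hypothetical; the only real API involved is the browser's `Audio` (HTMLAudioElement) constructor.

```javascript
// ASSUMPTION: a server at this endpoint forwards ?text=<word> to IBM's
// Text to Speech API and returns a WAV file, as in screen.html.
const IBM_TEXT_TO_SPEECH_ENDPOINT = "http://0.0.0.0:8080/api/speak?text=";

// Build the request URL; encodeURIComponent keeps spaces and punctuation
// from breaking the query string.
function speakUrl(word) {
  return IBM_TEXT_TO_SPEECH_ENDPOINT + encodeURIComponent(word);
}

// Browser-only: let an HTMLAudioElement fetch and play the returned audio.
// Returns null when no Audio constructor exists (for example, in plain Node).
function speak(word) {
  if (typeof Audio === "undefined") {
    return null;
  }
  const audio = new Audio(speakUrl(word));
  audio.play();
  return audio;
}
```

Creating an `Audio` object per word is a simpler alternative to swapping the `<source>` element and calling `load()` on a shared `<audio>` tag, which is what screen.html does.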

Setup

This project runs only on an HP Sprout machine. It uses the JavaScript SDK, an adapter over the C# and C++ bindings that control the camera, capture images, and track where objects move on the mat. An nw.exe executable (NW.js) starts a Chromium-based application that launches both HTML pages.

After installing the JavaScript SDK, replace screen.html and mat.html with the versions below, and include the objects folder containing the image assets for the words. Ideally this folder wouldn’t exist; Wordsprout would instead use remotely hosted images for any word that the available letters could spell.

<!-- Filename: screen.html -->

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0, user-scalable=no">

<meta name="description" content="">

<meta name="author" content="">

<title>wordsprout</title>

<link href="https://fonts.googleapis.com/css?family=Lato:100italic,100,300italic,300,400italic,400,700italic,700,900italic,900" rel="stylesheet" type="text/css">

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.4/css/bootstrap.min.css">

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/ladda-bootstrap/0.9.4/ladda-themeless.min.css">

<!-- JQuery and Bootstrap are used in this example, but not required -->

<script src="http://ajax.googleapis.com/ajax/libs/jquery/1.11.2/jquery.min.js"></script>

<script src="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.4/js/bootstrap.min.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/ladda-bootstrap/0.9.4/spin.min.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/ladda-bootstrap/0.9.4/ladda.min.js"></script>

</head>

<body>

<div><img src="wordsprout.png" style="max-height:60px"></div>

<div class="title">Points: <span style="padding:5px 20px; margin-left:30px; border:1px solid white" id="score">0</span></div>

<div class="word-text hide">

<div class="instr" style="font-size: 30pt">Spell the word you hear.</div>

<div class="instr hide" id="correct-word" style="font-size: 70pt; margin-top: 50px"></div>

</div>

<div class="word-pic">

<div class="instr" id="word">Compose a word:</div>

<div class=""><img id="pictoshow" src="" style="max-height: 500px"></div>

</div>

<div class="jumbotron">

<div class="container">

<button type="button" class="btn btn-default pull-right" id="closeApp">Close</button>

<p><button id="init" class="btn btn-primary btn-lg ladda-button" data-style="expand-right" onclick="initialize();">

<span class="ladda-label">Initialize Object Tracking</span></button>

<button id="button1" class="btn btn-primary btn-lg ladda-button" data-style="expand-right" onclick="addImages();">

<span class="ladda-label">Add Training Images</span></button> <button id="button2" class="btn btn-primary btn-lg ladda-button" data-style="expand-right" onclick="start();">

<span class="ladda-label">Start Tracking</span></button> <button id="button3" class="btn btn-primary btn-lg ladda-button" data-style="expand-right" onclick="stop();">

<span class="ladda-label">Stop Tracking</span></button></p>

Representation: <input type="text" id="letter" />

</div>

</div>

<div class="modal fade hide" id="codeTracking" tabindex="-1" role="dialog" aria-labelledby="codeModalLabel" aria-hidden="true">

<div class="modal-dialog">

<div class="modal-content">

<div class="modal-header">

<button type="button" class="close" data-dismiss="modal" aria-label="Close"><span aria-hidden="true">&times;</span></button>

<h4 class="modal-title" id="codeModalLabel">Code for Object Tracking</h4>

</div>

<pre class="modal-body" id="code_tracking">

</pre>

<div class="modal-footer">

<button type="button" class="btn btn-default" data-dismiss="modal">Close</button>

</div>

</div>

</div>

</div>

<div class="container">

<div class="alert alert-danger alert-dismissible fade in" role="alert" id="capture_error">

<button type="button" class="close" data-dismiss="alert" aria-label="Close"><span aria-hidden="true">&times;</span></button>

<p id="error_message"></p>

</div>

<div class="row text-center">

<h3 id="tracked-heading">Object Data</h3>

<div id="outline-result" class="bg-info"></div>

</div>

</div>

<audio controls preload="auto" id="audio">

<source id="wavsource" type="audio/wav" />

</audio>

<div id="message" style="font-size:36pt; text-align:center"></div>

<script>

var sprout = require("sprout");

var wordnet = require("wordnet");

var async = require("async");

var responseAnswer = "";

var score = 0;

// Local server that forwards the word to IBM's Text to Speech API and returns a WAV file.

var IBM_TEXT_TO_SPEECH_ENDPOINT = "http://0.0.0.0:8080/api/speak?text=";

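// Called from mat.html (via opener.checkAnswer()) when the Identify button is pressed.

// The composed word counts as correct if WordNet returns a definition for it.

window.checkAnswer = function() {

wordnet.lookup(responseAnswer, function(err, definitions) {

if(!err && definitions) {

score++;

$("#message").text("");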

$("#score").text(score);

$("#pictoshow").attr("src", "objects/"+responseAnswer);

document.getElementById("wavsource").src = IBM_TEXT_TO_SPEECH_ENDPOINT+encodeURIComponent(responseAnswer);

document.getElementById("audio").load();

document.getElementById("audio").play();

} else {

$("#message").text("incorrect");

}

});

};

var matHandle = sprout.openMat("mat.html");

var l = Ladda.create(document.querySelector("#button1"));

var spinner = Ladda.create(document.querySelector("#button2"));

var spinner1 = Ladda.create(document.querySelector("#button3"));

var spinner2 = Ladda.create(document.querySelector("#init"));

var objCount = 0;

var objName = "";

var letters = [];

var pieces = [];

var onboard = [];

$(document).ready(function() {

$("#capture_error").hide();

$("#tracked-heading").hide();

$("#button1").hide();

$("#button2").hide();

$("#button3").hide();

$.get("code.txt").done(function(data) {

$("#code_tracking").text(data);

}).fail(function() {

showError("Failed to read text file.");

});

});

function initialize() {

spinner2.start();

$("#tracked-heading").hide();

$("#outline-result").html("");

sprout.initializeObjectTracker().then(function() {

spinner2.stop();

$("#outline-result").html("Object tracking initialized.");

$("#button1").show();

$("#init").remove();

}).fail(function(e) {

//error message

showError(e.message);

})

}

function addImages() {

l.start();

$("#tracked-heading").hide();

$("#capture_error").hide();

$("#outline-result").html("");

sprout.capture().then(function(id) {

pieces.push($("#letter").val());

var r = sprout.addTrainingImages("o"+objCount, id);

objCount++;

return r;

}).then(function(data) {

console.log(data);

l.stop();

//will return a boolean value to indicate whether or not image was added successfully

if(data == true) {

$("#button2").show();

$("#button3").show();

}

$("#outline-result").html("Object captured successfully.");

}).fail(function(e) {

//error message

showError(e.message);

})

}

function display(data) {

// Rebuild the list of cards on the mat as [x-position, objectId] pairs.

onboard = [];

for(var i=0; i<data.TrackedObjects.length; i++) {

var tracked = data.TrackedObjects[i];

var objectId = parseInt(tracked.Name.slice(1, -1), 10);

onboard.push([Math.floor(tracked.PhysicalBoundaries.Location.X), objectId]);

}

// Sort once, after the loop, so the cards read left to right on the mat.

onboard.sort(function(a, b) { return a[0] - b[0]; });

// Concatenate the letter (or word) each card was trained to represent.

var t = "";

for(var k=0; k<onboard.length; k++) {

t += pieces[onboard[k][1]];

}

responseAnswer = t;

$("#word").text(responseAnswer);

spinner.stop();

}

function start() {

$("#tracked-heading").hide();

spinner.start();

$("#outline-result").html("");

sprout.startTracking(display).then(function(data) {

spinner.stop();

}).fail(function(err) {

//error message

showError(err.message);

})

}

function stop() {

spinner1.start();

sprout.stopTracking();

spinner1.stop();

}

function showError(error) {

l.stop();

spinner.stop();

spinner1.stop();

spinner2.stop();

$("#tracked-heading").hide();

$("#outline-result").hide();

$("#error_message").html(error);

$("#capture_error").show();

}

$("#closeApp").click(function() {

matHandle.close();

window.close();

});

initialize();

</script>

</body>

</html>

<!-- Filename: mat.html -->

<html>

<head>

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.4/css/bootstrap.min.css">

</head>

<body>

<div style="position:absolute; bottom:10px; width:100%; text-align:center">

<input type="button" onclick="opener.checkAnswer()" value="Identify" class="btn btn-primary" style="font-size:36pt" /></div>

</body>

</html>

Source Code

You can find the repo on GitHub.

Wordsprout

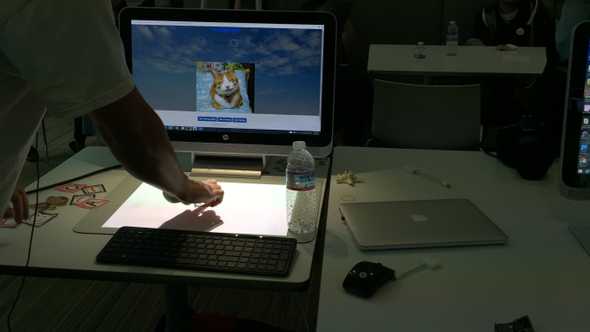

The objective of Wordsprout is to build words from cards on the mat (letters or pictures of objects) and to help learners recognize those words by sight and by sound. I drew the letters D, O, and G on three pieces of paper, decorated them to look like playing cards, and scanned each one into the Object Tracking system. I then associated each card with the letter written on it.

This step is called adding training images. Once the Object Tracking system has learned what each card looks like, and Wordsprout knows what each card represents (from the textbox input), Wordsprout can recognize these objects when they reappear on the mat.
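The bookkeeping behind training can be sketched as a small registry: each scan gets a tracker name of the form `"o" + index`, and a parallel array records what that card represents (the `pieces` array in screen.html). `createRegistry` is a hypothetical helper, and `lookup` assumes tracker names come back exactly as registered; screen.html strips characters with `slice(1, -1)`, so the real SDK payload may carry an extra trailing character.

```javascript
// Hypothetical registry mirroring addImages() in screen.html: the tracker
// knows cards only by name ("o0", "o1", ...), while `pieces` stores what
// each card represents ("d", "o", "monkey", ...).
function createRegistry() {
  const pieces = [];
  return {
    // Record the textbox value and return the name to train the tracker with.
    register(representation) {
      pieces.push(representation);
      return "o" + (pieces.length - 1);
    },
    // ASSUMPTION: names come back as registered, so dropping the "o"
    // prefix recovers the index.
    lookup(name) {
      return pieces[parseInt(name.slice(1), 10)];
    },
  };
}
```

Keeping the label outside the tracker is what lets the textbox hold anything, a single letter or a whole word like "monkey".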

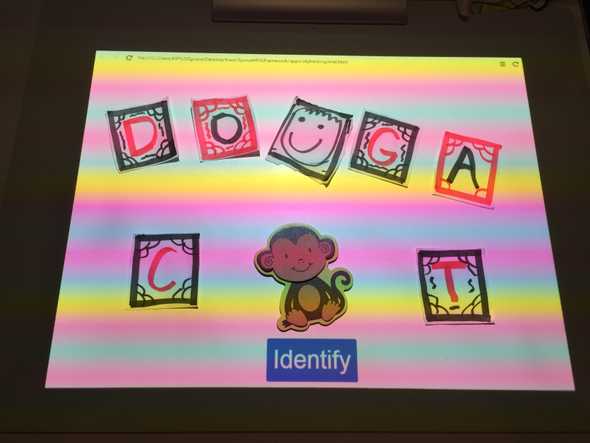

To start playing, click Start Tracking. This tells Wordsprout and the HP Sprout SDK to start looking for the objects they were trained on. As cards are placed on the mat, their letters appear on the monitor in the same left-to-right order. If you rearrange the cards, the letters on the screen change accordingly.
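Turning the tracker's output into a word amounts to sorting the cards by horizontal position and concatenating their labels. The sketch below assumes each tracked object has the `Name` and `PhysicalBoundaries.Location.X` fields that screen.html reads, and that names are exactly `"o" + index`; `composeWord` is a hypothetical name.

```javascript
// Sketch of how a tracking update becomes a word.
// ASSUMPTION: each tracked object looks like
//   { Name: "o<index>", PhysicalBoundaries: { Location: { X: <number> } } }
// mirroring the fields screen.html reads; the real SDK payload may differ.
function composeWord(trackedObjects, pieces) {
  return trackedObjects
    .map((obj) => ({
      x: obj.PhysicalBoundaries.Location.X,
      index: parseInt(obj.Name.slice(1), 10), // strip the "o" prefix
    }))
    .sort((a, b) => a.x - b.x) // left to right on the mat
    .map((card) => pieces[card.index]) // label each card was trained with
    .join("");
}
```

Sorting a fresh array once per update, rather than sorting while filling an array indexed by object ID, keeps the index-to-label mapping intact.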

Clicking the Identify button on the mat makes Wordsprout check the composed word, using the wordnet Node.js module to look up a definition. If one exists, a point is awarded, a picture of the spelled object is loaded, and the word is played as audio from IBM’s Text to Speech API.
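The scoring step can be sketched with the dictionary lookup passed in, so the logic runs without the wordnet module or a Sprout. `checkWord` is a hypothetical helper. It assumes `lookup(word, callback)` follows the Node-style `callback(err, definitions)` convention that screen.html relies on for `wordnet.lookup`, and it reuses the `"objects/" + word` image path from screen.html.

```javascript
// Sketch of the Identify flow in checkAnswer(), with the dictionary injected.
// ASSUMPTION: lookup(word, callback) calls callback(err, definitions), where
// definitions is an array when the word exists.
function checkWord(word, lookup, state, done) {
  lookup(word, function (err, definitions) {
    if (!err && definitions && definitions.length > 0) {
      state.score += 1; // a real word earns a point
      done({ correct: true, image: "objects/" + word, score: state.score });
    } else {
      done({ correct: false, score: state.score });
    }
  });
}
```

Injecting the lookup also makes it easy to swap WordNet for a smaller, child-friendly word list.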

Because I was running out of time at the hackathon, I couldn’t find an API that reliably returns a picture for a given object name. Instead, I downloaded pictures of the objects that the given letters could spell.

The other way to use Wordsprout is to scan objects, such as a monkey. I associated the monkey with the word “monkey.” When that piece is placed on the mat, Wordsprout displays a picture of a monkey and plays the word “monkey.”

Because the textbox accepts any value, the possibilities are endless: scan any object, associate any name with it, and each time the object is placed on the mat, a picture is shown and its name is spoken.

Another expansion that would be interesting to try out is to prompt the child to put a word or object on the mat by just playing the audio representation. For example, “Spell DOT” or “Place the monkey on the mat.” When the correct object is placed on the mat, a point is awarded.
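A prompt round for that idea could be as small as a spoken instruction plus a comparison against the composed word. This is a hypothetical sketch: `createPromptRound` and its `kind` parameter are illustrative names, and the prompt strings simply echo the examples above.

```javascript
// Hypothetical prompt mode: build the instruction to speak, then check the
// word composed on the mat against the target.
function createPromptRound(target, kind) {
  const prompt =
    kind === "object"
      ? "Place the " + target + " on the mat."
      : "Spell " + target.toUpperCase();
  return {
    prompt: prompt,
    // Compare case-insensitively, since trained card labels may be lowercase.
    check: function (composed) {
      return composed.toLowerCase() === target.toLowerCase();
    },
  };
}
```

The `prompt` string could be sent to the same Text to Speech endpoint, and `check` could run on each tracking update instead of waiting for the Identify button.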

Post Mortem

HP’s Sprout is a fascinating tool for hands-on learning. There are so many possibilities for children to engage in different types of activities using real manipulatives, and there is research showing that manipulatives add value to the learning process and engage different parts of the mind.

I was also impressed by how well the JavaScript library worked, given that it was only recently released. The one thing neither our team nor the Sprout team could figure out was how to scan multiple objects at the same time and split them up. That would let you put the D, O, and G cards on the mat, type DOG in the textbox, and train all three in one scan instead of three separate scans, with Wordsprout splitting up the letters accordingly. In fact, I now wonder if OCR could be added to this process.

And it’s been a while since I pulled an all-nighter. It was worth it. A beautiful sunrise is always nice to see, even bleary-eyed.