Last week I showed how I connected several pieces of hardware together for a demo at the Samsung Developer Conference. Much of the demo reused pieces that I’ve demoed previously. This blog post explains in more technical detail how to connect the various parts together.

SmartThings Hub

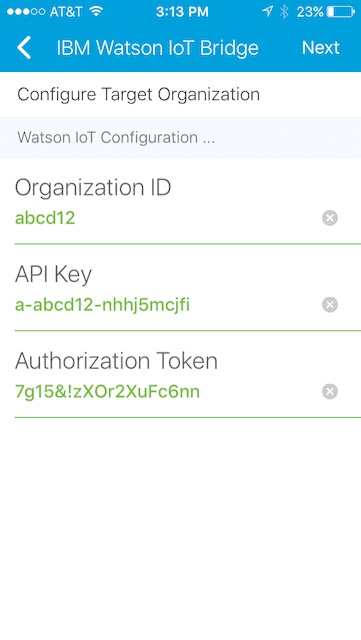

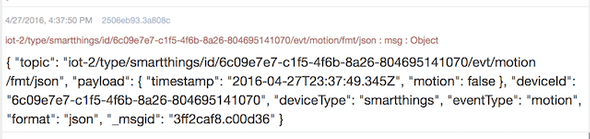

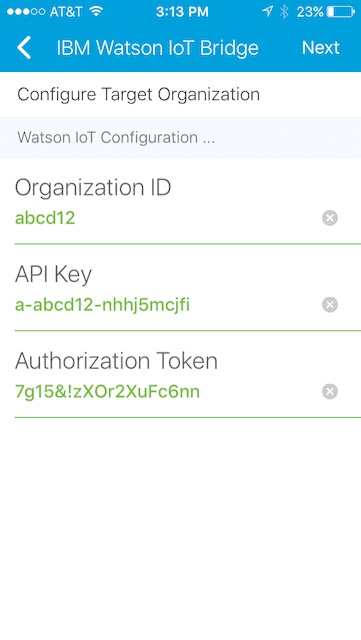

At the core of the demo is the SmartThings Hub. The door, window, and motion sensors, along with the outlet, trigger events that are sent up to the Watson IoT Platform. I first created a SmartThings application using the gateway app located on the ibm-watson-iot GitHub. Setup is pretty simple: enter the credentials for the Watson IoT Platform and select which sensors to track. The application automatically registers the devices in the Platform and manages the process of sending events up to the Platform.

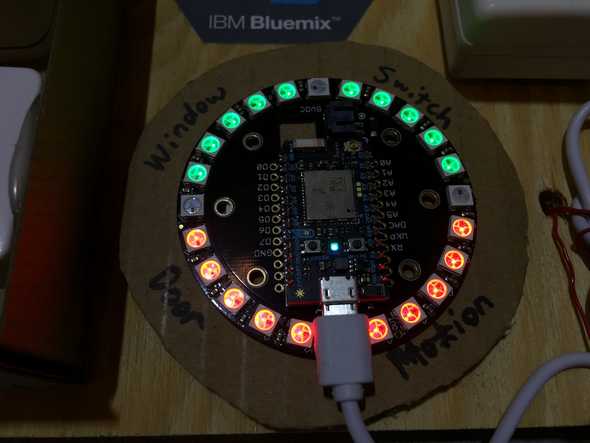

LED Ring

Using the Arduino code I wrote for the Tone LED Pin, I added the color black (the LED light is turned off), commented out the animateWipeClean function, and moved the FastLED.show() line as shown:

    void callback(char* topic, byte* payload, unsigned int length) {
      // Sets the color for each pin based on the message from the Watson IoT Platform
      for(int i=0; i<length; i++) {
        switch(payload[i]) {
          case 'r': leds[i] = CRGB::Red; break;
          case 'g': leds[i] = CRGB::Green; break;
          case 'b': leds[i] = CRGB::Blue; break;
          case 'y': leds[i] = CRGB::Yellow; break;
          case 'p': leds[i] = CRGB::Purple; break;
          case ' ': leds[i] = CRGB::Black; break;
        }
      }
      FastLED.show();
    }

Register this device in the Watson IoT Platform with a device type of ledpin.
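
To sanity-check a command string before sending it to the ring, the character mapping from the callback above can be mirrored off-device. This is only a sketch: the function name previewCommand and the color labels are my own, not part of the Arduino code.

```javascript
// Mirrors the character-to-color mapping in the Arduino callback.
// The names here (COLOR_BY_CHAR, previewCommand) are hypothetical helpers.
const COLOR_BY_CHAR = {
  r: 'Red',
  g: 'Green',
  b: 'Blue',
  y: 'Yellow',
  p: 'Purple',
  ' ': 'Black', // black means the LED is turned off
};

// Returns one entry per character. null means the LED would be left
// unchanged, because the switch statement has no default case.
function previewCommand(payload) {
  return Array.from(payload, (ch) => COLOR_BY_CHAR[ch] || null);
}

console.log(previewCommand('rg x'));
```

Any character outside the six known codes leaves that LED at its previous color, which is worth keeping in mind when composing commands.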

Intel Edison

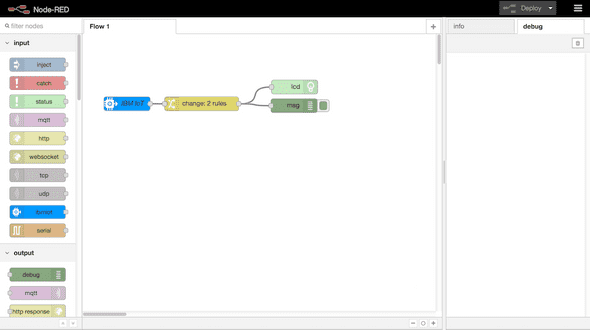

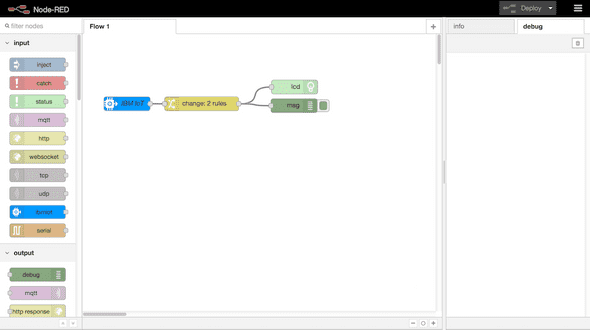

The Intel Edison has an LCD screen connected to the I2C port. Node-RED is installed with the node-red-contrib-grove-edison and node-red-contrib-scx-ibmiotapp nodes. The command to display messages comes into Node-RED via the ibmiot input node. The values of line1 and line2 are displayed via the LCD node.

Here’s the Node-RED flow JSON:

[{"id":"51d31af.698bee4","type":"ibmiot","z":"a6a7a597.21b96","name":"ls80t2"},{"id":"82cecd38.8aa928","type":"change","z":"a6a7a597.21b96","name":"","rules":[{"t":"set","p":"line1","pt":"msg","to":"payload.line1","tot":"msg"},{"t":"set","p":"line2","pt":"msg","to":"payload.line2","tot":"msg"}],"action":"","property":"","from":"","to":"","reg":false,"x":257.95001220703125,"y":151.20001220703125,"wires":[["9af32516.06ef48","2406d90f.a17b0e"]]},{"id":"9af32516.06ef48","type":"lcd","z":"a6a7a597.21b96","name":"","port":"0","line1":"Loading...","line2":"","bgColorR":255,"bgColorG":255,"bgColorB":255,"x":458.7833557128906,"y":114.9166488647461,"wires":[]},{"id":"4c925ea.04995a","type":"ibmiot in","z":"a6a7a597.21b96","authentication":"apiKey","apiKey":"51d31af.698bee4","inputType":"cmd","deviceId":"","applicationId":"","deviceType":"edison","eventType":"+","commandType":"display","format":"json","name":"IBM IoT","service":"registered","allDevices":"","allApplications":"","allDeviceTypes":"","allEvents":true,"allCommands":"","allFormats":"","x":96.70001220703125,"y":151.06668090820312,"wires":[["82cecd38.8aa928"]]},{"id":"2406d90f.a17b0e","type":"debug","z":"a6a7a597.21b96","name":"","active":true,"console":"false","complete":"true","x":457.6999816894531,"y":155.23333740234375,"wires":[]}]
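
The change node in this flow copies msg.payload.line1 and msg.payload.line2 up to msg.line1 and msg.line2 before the message reaches the LCD node. Written as plain JavaScript, the same step would look roughly like the sketch below; the function name liftDisplayLines is mine, and the flow itself uses a change node rather than a function node.

```javascript
// Equivalent of the change node's two "set" rules, as a plain function.
// liftDisplayLines is a hypothetical name used only for illustration.
function liftDisplayLines(msg) {
  msg.line1 = msg.payload.line1; // rule 1: set msg.line1 to msg.payload.line1
  msg.line2 = msg.payload.line2; // rule 2: set msg.line2 to msg.payload.line2
  return msg;
}

// Example command payload with the two display lines
const out = liftDisplayLines({ payload: { line1: 'Door opened', line2: 'In 71F Out 58F' } });
console.log(out.line1, '|', out.line2);
```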

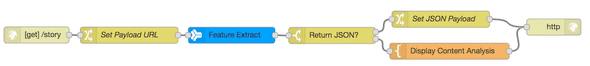

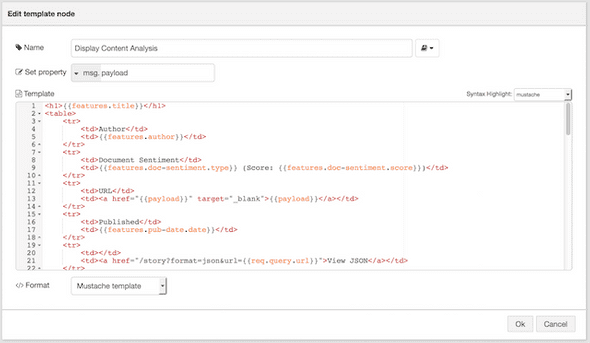

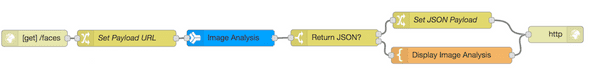

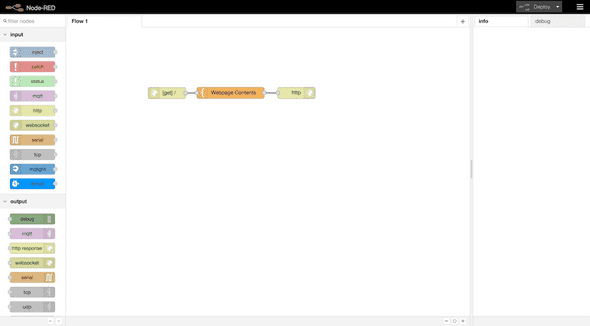

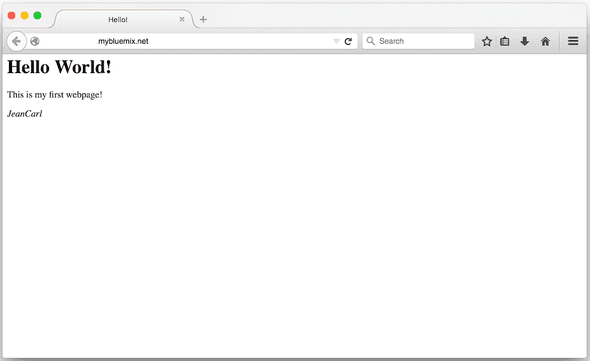

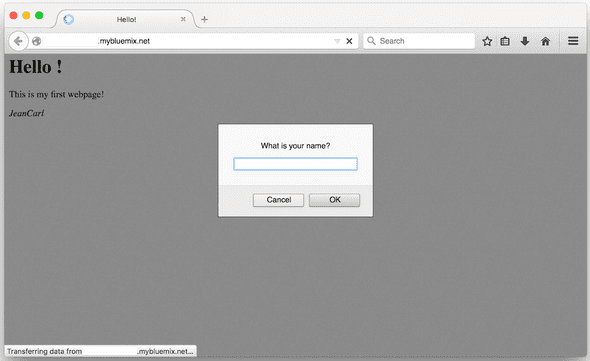

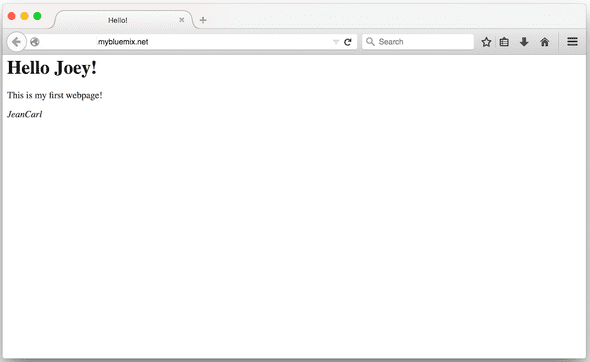

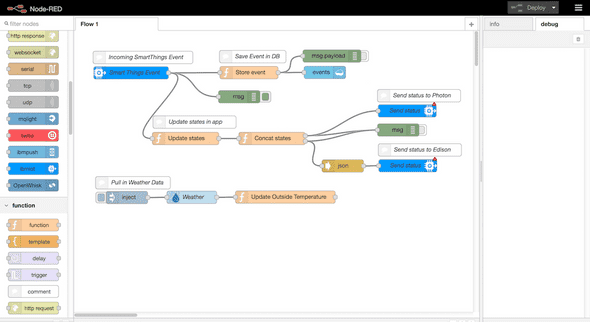

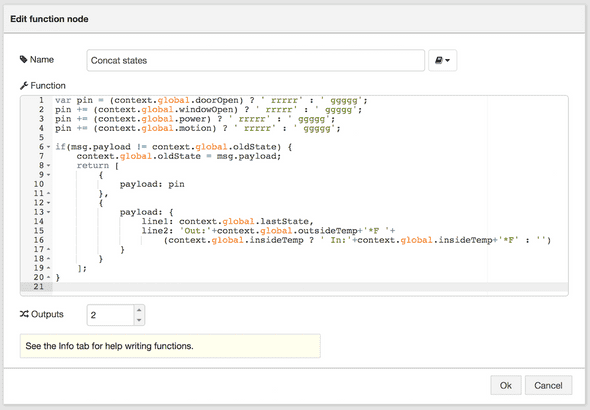

Node-RED

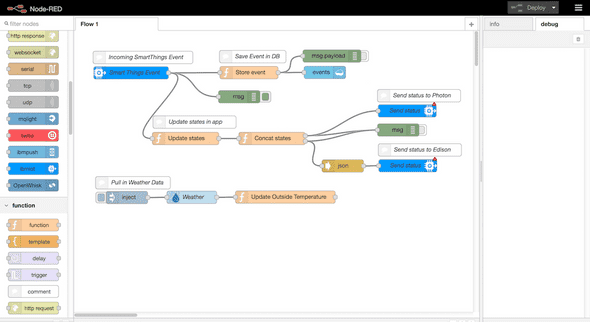

Much of the logic in the demo is performed in a Node-RED application in IBM Bluemix.

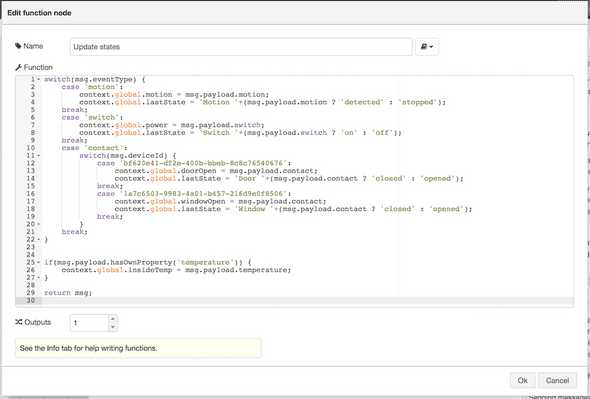

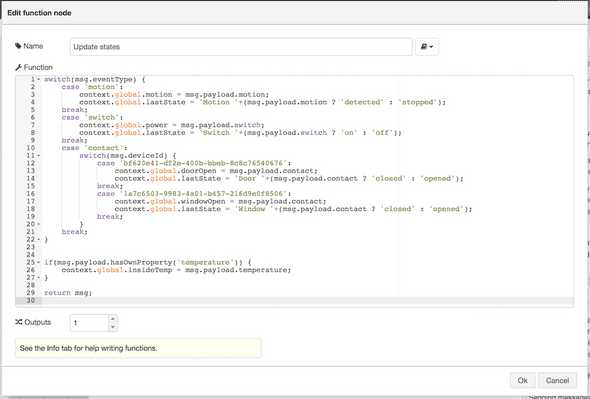

An ibmiot input node listens for the device type smartthings and receives all the incoming sensor data. The core of the application lives in the Update state node. Four global variables track:

- context.global.motion - motion has been sensed

- context.global.power - power is being used

- context.global.doorClosed - door is closed

- context.global.windowClosed - window is closed

If there is a temperature property in the event, I update the indoor temperature value that is stored in context.global.insideTemp.
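
The state tracking described above can be sketched as a small function. This is not the actual node code: the event field names (deviceId, motion, contact, power, temperature) and their values are assumptions for illustration, and the real payload from the SmartThings gateway app may look different. The state object stands in for context.global.

```javascript
// Sketch of an "Update state" step. `state` stands in for context.global.
// ASSUMPTION: the event fields and values below are hypothetical.
function updateState(state, event) {
  if (event.motion !== undefined) {
    state.motion = event.motion === 'active'; // motion has been sensed
  }
  if (event.power !== undefined) {
    state.power = Number(event.power) > 0; // power is being used
  }
  if (event.contact !== undefined) {
    const closed = event.contact === 'closed';
    // Hypothetical device ids used to tell the two contact sensors apart
    if (event.deviceId === 'door') state.doorClosed = closed;
    if (event.deviceId === 'window') state.windowClosed = closed;
  }
  if (event.temperature !== undefined) {
    state.insideTemp = Number(event.temperature); // indoor temperature
  }
  return state;
}

const state = {};
updateState(state, { deviceId: 'door', contact: 'open', temperature: 71 });
console.log(state);
```

In a Node-RED function node, the same assignments would target context.global directly instead of a local object.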

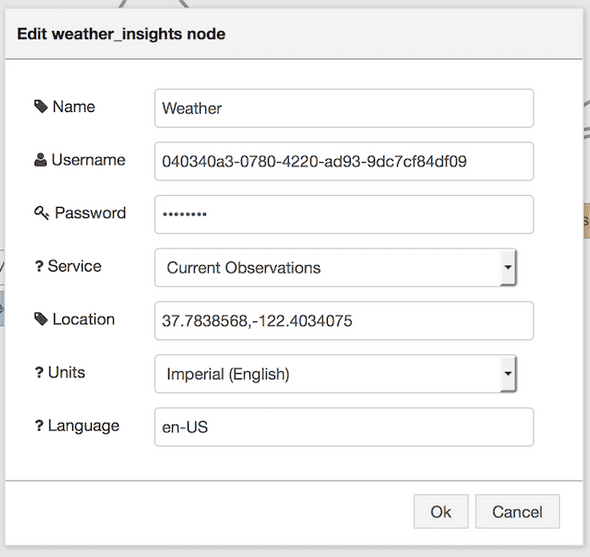

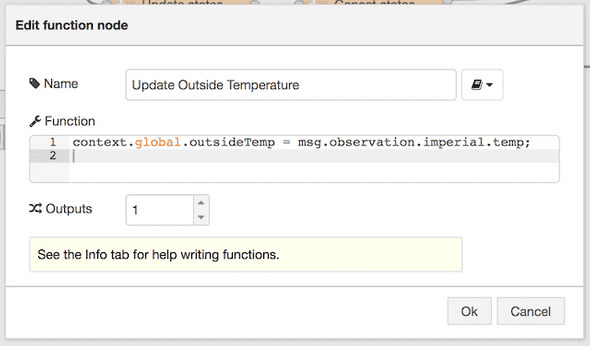

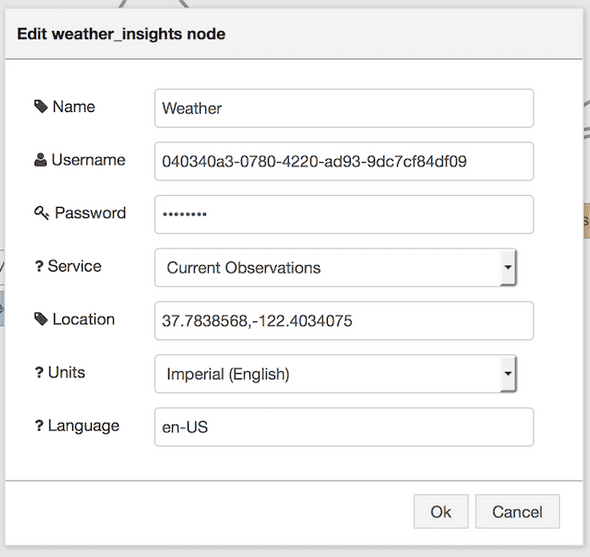

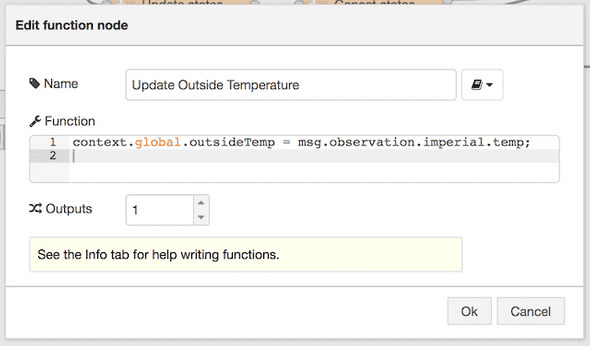

The last piece of data is the outside temperature. Using the Weather Insights node, I pass the location to the Weather Channel API and store the returned temperature in the global variable context.global.outsideTemp for use by the Intel Edison.

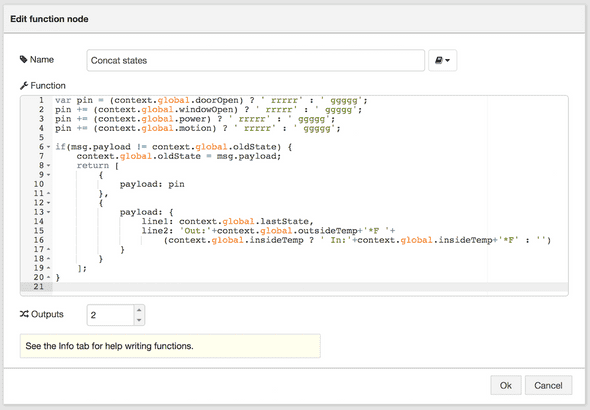

When a sensor emits an event to the Watson IoT Platform, the Node-RED application sends out two messages. First, the application constructs a string of 24 characters for the LED Ring. If a sensor is active (opened, motion sensed, or outlet powered), I use the color red to convey that some part of the home isn't in a secure state. Otherwise, I use the color green. This string is sent as a command to the LED Ring.
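
A minimal sketch of building that 24-character string follows. How the 24 LEDs are divided among the sensors is my assumption (four equal segments of six LEDs, in the order motion, power, door, window); the actual node may lay them out differently. The 'r' and 'g' codes match the characters the LED Ring callback understands.

```javascript
const LED_COUNT = 24;

// ASSUMPTION: four equal segments, one per tracked sensor, in this order.
function buildLedString(state) {
  const unsecured = [
    state.motion === true,        // motion sensed
    state.power === true,         // outlet powered
    state.doorClosed !== true,    // door open (or state not yet known)
    state.windowClosed !== true,  // window open (or state not yet known)
  ];
  const segment = LED_COUNT / unsecured.length; // 6 LEDs per sensor
  return unsecured.map((active) => (active ? 'r' : 'g').repeat(segment)).join('');
}

console.log(buildLedString({ motion: true, power: false, doorClosed: true, windowClosed: true }));
```

Treating an unknown door or window state as unsecured is a design choice in this sketch: the ring shows red until the first event confirms the contact is closed.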

The remaining code in this node composes a message that is sent to the Intel Edison. Three pieces of information are used:

- context.global.lastState - contains the last action that the SmartThings sensors emitted to the Platform

- context.global.outsideTemp - contains the Fahrenheit temperature outside

- context.global.insideTemp - contains the Fahrenheit temperature inside
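
Composing the display message from those three values might look like the sketch below. The layout (last action on line1, both temperatures on line2) and the 16-character line width are assumptions on my part; only the line1 and line2 property names come from the Edison flow.

```javascript
// ASSUMPTION: a 16-character-wide, two-line LCD and this particular layout.
const LCD_WIDTH = 16;

function buildDisplayPayload(state) {
  const line1 = String(state.lastState || '').slice(0, LCD_WIDTH);
  const line2 = `In ${state.insideTemp}F Out ${state.outsideTemp}F`.slice(0, LCD_WIDTH);
  return { line1, line2 };
}

const payload = buildDisplayPayload({ lastState: 'Door opened', insideTemp: 71, outsideTemp: 58 });
console.log(payload);
```

Truncating each line keeps a long sensor name from wrapping or being cut off unpredictably by the display.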

It was an interesting project to combine the different components. It was also pretty simple to connect these separate components via the Watson IoT Platform. There are plenty of other things I could have connected. What can you connect?